Artificial intelligence (AI) is constantly reshaping our lives. It saves companies and individuals time and money, and its applications extend to medicine, where it could potentially save lives.

By understanding AI’s evolution and achievements, we can shape strategies for its future development. One of AI’s most significant medical impacts is already being seen in oncology, and that impact is set to continue.

AI has opened important opportunities in the management of cancer patients and is being applied to the fight against cancer on several fronts. We will look at these fronts and consider where AI can best help doctors and patients in the future.

Where Did AI Come From?

Alan Turing first conceived the idea of computers mimicking critical thinking and intelligent behavior in 1950, and by 1956 John McCarthy had coined the term Artificial Intelligence (AI).

AI started as a simple set of “if A then B” computing rules but has advanced dramatically in the years since. Modern AI comprises complex, multi-faceted algorithms, some of which are loosely modeled on the human brain and perform similar functions.

AI and Oncology

AI has now taken hold in so many aspects of our lives that we often do not even realize it. Yet it remains an emerging and evolving technology that benefits many scientific fields, including the management of cancer patients.

AI excels at one task in particular: recognizing patterns and interactions once it has been given sufficient training samples. It uses the training data to build a representative model and then applies that model to process new data and support decision-making in a specific field.
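To make the idea of training data, a representative model, and a decision concrete, here is a minimal sketch in Python. It uses a nearest-centroid classifier, one of the simplest pattern-recognition methods, chosen purely for illustration; it is not a method described in the source, and the feature values and class names are hypothetical toy data, not clinical measurements.

```python
from math import dist


def train_centroids(samples, labels):
    """Build a simple 'representative model': the mean feature vector of each class."""
    sums = {}
    counts = {}
    for x, y in zip(samples, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(model, x):
    """Assign x to the class whose centroid is closest (Euclidean distance)."""
    return min(model, key=lambda y: dist(model[y], x))


# Hypothetical toy data: two made-up features per sample, not real clinical measurements.
train_x = [[1.0, 1.2], [0.8, 1.0], [1.1, 0.9], [3.0, 3.2], [3.1, 2.9], [2.8, 3.0]]
train_y = ["class_a", "class_a", "class_a", "class_b", "class_b", "class_b"]

model = train_centroids(train_x, train_y)
print(predict(model, [0.9, 1.1]))
print(predict(model, [2.9, 3.1]))
```

The “model” here is just one average point per class. Real oncology systems learn far richer representations, but the pattern of training on labeled examples and then applying the result to new data is the same.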

When applied to precision oncology, AI can reshape existing processes. It can integrate large amounts of data obtained from multi-omics analyses, such as genomics, transcriptomics, and proteomics. This integration is possible thanks to advances in high-performance computing and several novel deep-learning strategies.

Notably, applications of AI are constantly expanding in cancer screening and detection, diagnosis, and classification. AI is also aiding in the characterization of cancer genomics and the analysis of the tumor microenvironment, as well as the assessment of biomarkers for prognostic and predictive purposes. AI has also been applied to follow-up care strategies and drug discovery.

Machine Learning and Deep Learning

To better understand the current and future roles of AI, two essential terms under the AI umbrella must be clearly defined: machine learning and deep learning.

Machine Learning

Machine learning is a general concept that refers to the ability of a machine (a computer) to learn from data and thereby improve its patterns and models of analysis, rather than relying only on explicitly programmed rules.

Deep Learning

Deep learning, on the other hand, is a machine learning method that uses layered algorithmic systems, called deep neural networks, loosely inspired by networks of biological neurons. Once trained, these deep networks can achieve high predictive performance.
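The layered structure behind deep networks can be sketched in a few lines of Python. This is a minimal, illustrative forward pass only: each artificial neuron computes a weighted sum of its inputs plus a bias and applies a ReLU activation, and stacking several such layers is what makes a network “deep.” The weights below are arbitrary, hypothetical values; a real network learns its weights from training data (typically by backpropagation), which this sketch omits.

```python
import math


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus bias, passed through a ReLU."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, total)


def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, network):
    """Pass x through each hidden layer in turn, then squash the single
    output neuron's value into a score between 0 and 1."""
    for weight_rows, biases in network[:-1]:
        x = layer(x, weight_rows, biases)
    out_weights, out_bias = network[-1]
    z = sum(w * v for w, v in zip(out_weights, x)) + out_bias
    return sigmoid(z)


# Hypothetical, untrained weights: 2 inputs -> 2 neurons -> 2 neurons -> 1 output.
network = [
    ([[0.5, -0.2], [0.3, 0.8]], [0.0, 0.1]),  # hidden layer 1
    ([[1.0, -1.0], [0.4, 0.6]], [0.0, 0.0]),  # hidden layer 2
    ([0.7, -0.5], 0.2),                        # output neuron
]

score = forward([1.0, 2.0], network)
print(score)
```

Running `forward([1.0, 2.0], network)` returns a score between 0 and 1; with untrained weights, that number carries no medical meaning. Training is what turns this structure into a model with high predictive performance.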

Both machine and deep learning are central to the AI management of cancer patients.

Current Applications of AI in Oncology

To illustrate AI’s roles and potential in managing cancer patients, and to show where its future uses may lead, here are some of its current applications in oncology.

In the charts below, “a” refers to oncology and related fields and “b” to the cancer types the devices are used to diagnose.

The graph, from the British Journal of Cancer, summarizes all FDA-approved AI-based devices for oncology and related specialties. The researchers found that 71 devices had been approved.

As the chart shows, most of these devices are for cancer radiology, meaning they are used to detect cancer in various radiological scans. According to the researchers, the vast majority (>80%) of the approved devices relate to the complex area of cancer diagnostics.

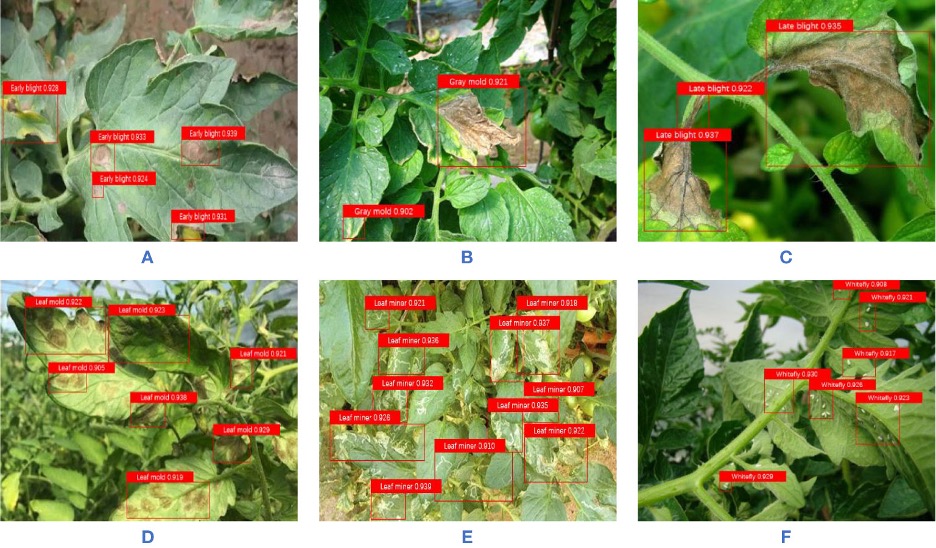

The image above shows a deep learning algorithm trained to analyze MRI images and predict the presence of an IDH1 gene mutation in brain tumors.

Regarding the tumor types that AI-enhanced devices can investigate, the largest share of devices (33.8%) applies to a broad spectrum of solid malignancies, categorized as cancer in general. However, the specific tumor type with the most AI devices is breast cancer (31.0%), followed by lung and prostate cancer (8.5% each), colorectal cancer (7.0%), brain tumors (2.8%), and six other types (1.4% each).
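These percentages can be cross-checked with simple arithmetic. Assuming each share is a fraction of the same 71 approved devices, and that “six other types” means six separate types at 1.4% each, every percentage corresponds to a whole number of devices and the counts sum to 71. The device counts computed below are our own inference from the rounded percentages, not figures quoted from the study.

```python
# Shares reported in the text, as percentages of the 71 FDA-approved devices.
TOTAL_DEVICES = 71
reported = {
    "cancer in general": 33.8,
    "breast": 31.0,
    "lung": 8.5,
    "prostate": 8.5,
    "colorectal": 7.0,
    "brain": 2.8,
}
# Assumption: the "six other types" are six separate entries at 1.4% each.
reported.update({f"other type {i}": 1.4 for i in range(1, 7)})

# Infer the whole-device count behind each percentage (an inference, not a study figure).
implied_counts = {name: round(pct / 100 * TOTAL_DEVICES) for name, pct in reported.items()}

for name, count in implied_counts.items():
    # Each inferred count should reproduce the reported percentage to one decimal place.
    assert round(count / TOTAL_DEVICES * 100, 1) == reported[name], name

print(implied_counts)
print(sum(implied_counts.values()))  # equals TOTAL_DEVICES if the shares are complete
```

Under these assumptions, the implied counts are 24 (cancer in general), 22 (breast), 6 each (lung and prostate), 5 (colorectal), 2 (brain), and 1 for each of the six other types.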

Moving Forward with AI

Since its origin, AI has demonstrated its capabilities in nearly every scientific field, and it retains impressive growth potential in oncology.

The devices approved so far are not intended to replace classical oncological analysis and diagnosis but to complement them, helping with exceptional cases and improving the management of cancer patients.

A cancer diagnosis has classically been the starting point from which appropriate therapeutic and disease management approaches are designed. AI-based diagnosis is a step forward and will remain an essential focus of ongoing and future development. However, AI will likely expand into other vital areas, such as drug discovery, drug delivery, therapy administration, and treatment follow-up strategies.

The cancer types that currently receive the most AI focus (breast, lung, and prostate cancer) all have high incidence. This leaves room for AI to improve the diagnosis and treatment of other tumor types, including rare cancers that still lack standardized approaches.

Building large, reliable data sets for rare cancers will take longer. Taken together, however, rare cancers form one of the most important categories in precision oncology, and this group will become a growing focus for AI.

Given the positive results already seen in oncology, AI should be allowed to expand its reach and help resolve the cancer-related questions it has the potential to answer. If given this opportunity, AI could become the next step in a revolution in cancer treatment.

Closing Thoughts

Artificial intelligence (AI) is reshaping many fields, including medicine and the entire landscape of oncology. AI brings to oncology several new opportunities for improving the management of cancer patients.

It has already proven its abilities in diagnosis, as shown by the number of FDA-approved devices already in practice. So far, AI has focused on the cancers with the highest incidence, but, taken together, rare cancers represent a massive opportunity.

The next stage will be to create multidisciplinary platforms that use AI to fight all cancers, including rare tumors. We are at the beginning of the oncology AI revolution.

Disclaimer: The information provided in this article is solely the author’s opinion and not investment advice – it is provided for educational purposes only. By using this, you agree that the information does not constitute any investment or financial instructions. Do conduct your own research and reach out to financial advisors before making any investment decisions.

The author of this text, Jean Chalopin, is a global business leader with a background encompassing banking, biotech, and entertainment. Mr. Chalopin is Chairman of Deltec International Group, www.deltecbank.com.

The co-author of this text, Robin Trehan, has a bachelor’s degree in economics, a master’s in international business and finance, and an MBA in electronic business. Mr. Trehan is a Senior VP at Deltec International Group, www.deltecbank.com.

The views, thoughts, and opinions expressed in this text are solely the views of the authors, and do not necessarily reflect those of Deltec International Group, its subsidiaries, and/or its employees.