Neural implants, also known as brain implants, have been the subject of extensive research in recent years, with the potential to revolutionise healthcare. These devices are designed to interact directly with the brain, allowing for the transmission of signals that can be used to control various functions of the body.

While the technology is still in its early stages, there is growing interest in its potential applications, including treating neurological disorders, enhancing cognitive abilities, and even creating brain-machine interfaces.

According to PharmiWeb, the brain implants market is expected to grow at a compound annual growth rate (CAGR) of 12.3% between 2022 and 2032, reaching a valuation of US$18 billion by 2032.
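To put the forecast in perspective, the short sketch below works backwards from the two quoted figures (12.3% CAGR and US$18 billion in 2032) to the 2022 starting value they imply. The result, roughly US$5.6 billion, is derived arithmetic rather than a number reported by the source, and it assumes ten full compounding periods between 2022 and 2032.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Back out the starting value implied by an end value and a CAGR."""
    return end_value / (1.0 + cagr) ** years


def project(start_value: float, cagr: float, years: int) -> list[float]:
    """Year-by-year values under constant compound growth."""
    return [start_value * (1.0 + cagr) ** t for t in range(years + 1)]


END_VALUE_BN = 18.0   # US$ billion in 2032, from the quoted forecast
CAGR = 0.123          # 12.3% per year, from the quoted forecast
YEARS = 2032 - 2022   # assumption: ten compounding periods

start_bn = implied_start_value(END_VALUE_BN, CAGR, YEARS)
path = project(start_bn, CAGR, YEARS)

for year, value in zip(range(2022, 2033), path):
    print(f"{year}: US${value:.1f} billion")
```

In other words, a 12.3% annual growth rate sustained for a decade would slightly more than triple the size of the market.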

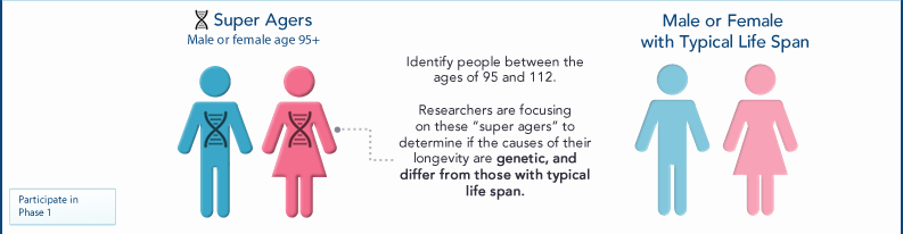

During the forecast period, the market for brain implants is expected to grow significantly, primarily because of the increasing prevalence of neurological disorders worldwide and the expanding elderly population. As the ageing population grows, so does the number of people living with conditions such as Parkinson’s disease, which in turn increases demand for brain implants.

This article will explore the technology behind neural implants and the benefits and considerations associated with their use.

Understanding Neural Implants

Neural implants are electronic devices surgically implanted into the brain to provide therapeutic or prosthetic functions. They are designed to interact with the brain’s neural activity by receiving input from the brain or sending output to it. These devices typically consist of a set of electrodes attached to specific brain regions, and a control unit, which processes the signals received from the electrodes.

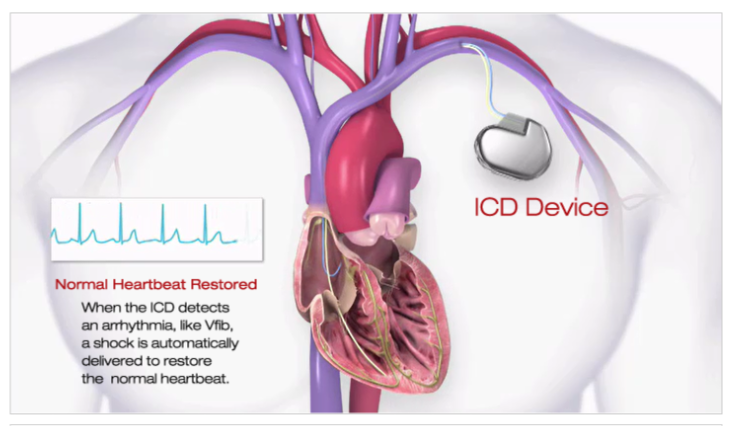

The electrodes in neural implants can be used to either stimulate or record neural activity. Stimulating electrodes send electrical impulses to the brain, which can be used to treat conditions such as Parkinson’s disease or epilepsy. Recording electrodes are used to detect and record neural activity, which can be used for research purposes or to control prosthetic devices.

To function correctly, neural implants require a control unit responsible for processing and interpreting the signals received from the electrodes. The control unit typically consists of a small computer implanted under the skin and a transmitter that sends signals wirelessly to an external device. The external device can adjust the implant’s settings, monitor its performance, or analyse the data collected by the electrodes.
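As a rough illustration of the kind of processing a control unit might perform on a recording electrode's signal, the sketch below detects "spikes" (brief, large deflections) in a simulated trace using a simple noise-based threshold. Everything here is an assumption made for illustration: the signal is synthetic, the function name `detect_spikes` and its parameters are hypothetical, and real implants use far more sophisticated, device-specific pipelines.

```python
import random
import statistics


def detect_spikes(samples, threshold_sd=5.0, refractory=30):
    """Return sample indices where the signal crosses a noise-based threshold.

    threshold_sd: threshold as a multiple of the estimated noise level.
    refractory: samples to skip after a detection so one spike is not counted twice.
    """
    # Robust noise estimate: median absolute value / 0.6745 approximates
    # the standard deviation of Gaussian noise and is barely affected by spikes.
    noise_sd = statistics.median(abs(s) for s in samples) / 0.6745
    threshold = threshold_sd * noise_sd

    spikes = []
    last = -refractory
    for i, s in enumerate(samples):
        if abs(s) > threshold and i - last >= refractory:
            spikes.append(i)
            last = i
    return spikes


# Synthetic recording: Gaussian background noise plus three injected spikes.
rng = random.Random(0)
samples = [rng.gauss(0.0, 1.0) for _ in range(3000)]
true_spikes = [500, 1200, 2100]   # hypothetical spike times (sample indices)
for idx in true_spikes:
    samples[idx] -= 12.0          # large negative deflection

detected = detect_spikes(samples)
print("Detected spikes at samples:", detected)
```

Estimating the noise level from the median, rather than the mean or standard deviation, is a common design choice because the spikes themselves would otherwise inflate the estimate.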

Neural implants can treat neurological disorders, including Parkinson’s disease, epilepsy, and chronic pain. They can also help individuals who have suffered a spinal cord injury or amputation to control prosthetic devices, such as robotic arms or legs.

The Benefits of Neural Implants

Neural implants have the potential to provide a wide range of benefits for individuals suffering from neurological disorders. These benefits include:

Improved quality of life. Neural implants can significantly improve the quality of life for individuals suffering from neurological disorders such as Parkinson’s disease, epilepsy, or chronic pain. By controlling or alleviating the symptoms of these conditions, individuals can experience greater independence, mobility, and overall well-being.

Enhanced cognitive abilities. Neural implants also have the potential to enhance cognitive abilities, such as memory and attention. By stimulating specific regions of the brain, neural implants may help to improve cognitive function, particularly in individuals suffering from conditions such as Alzheimer’s disease or traumatic brain injury.

Prosthetic control. Neural implants can also be used to control prosthetic devices, such as robotic arms or legs. By directly interfacing with the brain, these devices can be controlled with greater precision and accuracy, providing greater functionality and independence for individuals with amputations or spinal cord injuries.

Research. Neural implants can also be used for research purposes, providing insights into the workings of the brain and the underlying mechanisms of neurological disorders. By recording neural activity, researchers can gain a better understanding of how the brain functions and develop new treatments and therapies for a wide range of neurological conditions.

While neural implants offer significant benefits, they also present many challenges that must be taken into account.

The Challenges

There are several challenges to consider regarding the use of neural implants.

Invasive nature. Neural implants require surgery to be implanted in the brain, which carries inherent risks such as infection, bleeding, and damage to brain tissue. Additionally, the presence of a foreign object in the brain can cause inflammation and scarring, which may affect the long-term efficacy of the implant.

Technical limitations. Neural implants require advanced technical expertise to develop and maintain. Many technical challenges still need to be overcome to make these devices practical and effective. For example, developing algorithms that can accurately interpret the signals produced by the brain is a highly complex task that requires significant computational resources.
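To give a sense of what "interpreting the signals produced by the brain" can mean in practice, here is a deliberately simplified decoding sketch. It assumes each intended movement produces a characteristic pattern of firing rates across three recording channels, learns the average pattern for each movement, and then labels new recordings by the closest average (a nearest-centroid classifier). The tuning values, noise level, and function names are all hypothetical; real decoders work with many more channels, non-stationary signals, and much noisier data, which is why the problem is so hard.

```python
import random


def train_centroids(examples):
    """Average the firing-rate vectors recorded for each intended movement."""
    sums, counts = {}, {}
    for label, rates in examples:
        acc = sums.setdefault(label, [0.0] * len(rates))
        for i, r in enumerate(rates):
            acc[i] += r
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def decode(centroids, rates):
    """Pick the movement whose average pattern is closest (squared Euclidean distance)."""
    def dist(label):
        return sum((a - b) ** 2 for a, b in zip(centroids[label], rates))
    return min(centroids, key=dist)


# Hypothetical average firing rates (spikes per second) on three channels.
TUNING = {"left": [20.0, 5.0, 10.0], "right": [5.0, 20.0, 10.0], "rest": [8.0, 8.0, 8.0]}


def simulate(rng, n_per_label, noise_sd=2.0):
    """Generate noisy synthetic trials for each movement."""
    return [(label, [rng.gauss(m, noise_sd) for m in means])
            for label, means in TUNING.items() for _ in range(n_per_label)]


rng = random.Random(42)
centroids = train_centroids(simulate(rng, 50))
test_trials = simulate(rng, 100)
correct = sum(decode(centroids, rates) == label for label, rates in test_trials)
accuracy = correct / len(test_trials)
print(f"Decoding accuracy on synthetic data: {accuracy:.0%}")
```

The high accuracy on this toy data reflects how cleanly the synthetic patterns are separated, not how well such a simple method would perform on real neural recordings.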

Cost. Neural implants can be costly and are often not covered by insurance. This can limit access to this technology for individuals who cannot afford the cost of the implant and associated medical care.

Ethical considerations. Using neural implants raises several ethical considerations, particularly concerning informed consent, privacy, and the potential for unintended consequences. For example, there may be concerns about implants being used for enhancement rather than treatment, or being misused in other ways.

Long-term durability. Neural implants must be able to function effectively for extended periods, which can be challenging given the harsh environment of the brain. The long-term durability of these devices is an area of active research and development, with ongoing efforts to develop materials and designs that can withstand the stresses of the brain.

While the challenges associated with neural implants are significant, ongoing research and development in this field are helping to overcome many of these obstacles. As these devices become more reliable, accessible, and affordable, they have the potential to significantly improve the lives of individuals suffering from a wide range of neurological conditions.

Companies Operating in the Neural Implant Space

Several companies are developing neural implants for various applications, including medical treatment, research, and prosthetics.

Neuralink, co-founded by Elon Musk, is focused on developing neural implants that can help to treat a range of neurological conditions, including Parkinson’s disease, epilepsy, and paralysis. The company’s initial focus is on developing a ‘brain-machine interface’ that enables individuals to control computers and other devices using their thoughts.

Blackrock Microsystems develops various implantable devices for neuroscience research and clinical applications. The company’s products include brain implants that can be used to record and stimulate neural activity and devices for deep brain stimulation and other therapeutic applications.

Medtronic is a medical device company that produces a wide range of products, including implantable devices for treating neurological conditions such as Parkinson’s disease, chronic pain, and epilepsy. The company’s deep brain stimulation devices are among the most widely used for treating movement disorders and other neurological conditions.

Synchron is developing an implantable brain-computer interface device that can enable individuals with paralysis to control computers and other devices using their thoughts. The company’s technology is currently being tested in clinical trials, with the eventual aim of making it available to individuals with spinal cord injuries and other forms of paralysis.

Kernel focuses on developing neural implants for various applications, including medical treatment, research, and cognitive enhancement. The company’s initial focus is developing a ‘neuroprosthesis’ that can help treat conditions such as depression and anxiety by directly stimulating the brain.

Closing Thoughts

The next decade for neural implants will likely see significant technological advancements. One central area of development is improving the precision and accuracy of implant placement, which can enhance the efficacy and reduce the risks of these devices. Another area of focus is developing wireless and non-invasive alternatives that can communicate with the brain without requiring surgery.

Machine learning and artificial intelligence advancements are also expected to impact neural implants significantly. These technologies can enable the development of more sophisticated and intelligent implants that can adapt to the user’s needs and provide more effective treatment. Additionally, integrating neural implants with other technologies, such as virtual and augmented reality, could lead to exciting new possibilities for treating and enhancing human cognitive function.

Overall, the coming decade will likely bring significant progress in both the technology and its applications in treating a wide range of neurological and cognitive conditions. However, ethical and regulatory questions must also be carefully addressed as the field advances.

Disclaimer: The information provided in this article is solely the author’s opinion and not investment advice – it is provided for educational purposes only. By using this, you agree that the information does not constitute any investment or financial instructions. Do conduct your own research and reach out to financial advisors before making any investment decisions.

The author of this text, Jean Chalopin, is a global business leader with a background encompassing banking, biotech, and entertainment. Mr. Chalopin is Chairman of Deltec International Group, www.deltec.io.

The co-author of this text, Robin Trehan, has a bachelor’s degree in economics, a master’s in international business and finance, and an MBA in electronic business. Mr. Trehan is a Senior VP at Deltec International Group, www.deltec.io.

The views, thoughts, and opinions expressed in this text are solely the views of the authors, and do not necessarily reflect those of Deltec International Group, its subsidiaries, and/or its employees.