Artificial intelligence (AI) and robotics are different disciplines, and robots can perform without AI. However, robotics reaches the next level when AI enters the mix.

We will explain how these disciplines differ and explore spaces where AI is utilized to create envelope-pushing robotic technology.

Robotics in Brief

Robotics is a branch of engineering and computer science in which machines are built to perform tasks without human intervention once they have been programmed.

This definition is broad, covering everything from a robot that aids in silicon chip manufacturing to the humanoid robots of science fiction, which are already being designed, like the Asimo robot from Honda. In global finance, we’ve had robo-advisors working with us for some years already.

Robots have traditionally been used for tasks that humans cannot do efficiently (such as moving an assembly line’s heavy parts), that are repetitive, or both. For example, robots can accomplish the same task thousands of times a day, whereas a human would be slower, get bored, make more mistakes, or be physically unable to complete it.

Robotics and AI

These terms are sometimes used interchangeably, but AI and robotics are very different. AI systems mimic the human mind: they learn through training to solve problems and make decisions autonomously, without needing explicit programming of the “if A, then B” kind.

As we have stated, robots are machines programmed to conduct particular tasks. Most robotics tasks do not require AI, as they are repetitive and predictable and do not need decision-making.
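The contrast between fixed “if A, then B” programming and learning from training can be illustrated with a toy sorting robot. The sketch below is only an illustration under stated assumptions: the function names, the bin labels, and the 5 kg cutoff are hypothetical and do not describe any real robot. The first function hard-codes its rule; the second derives its cutoff from labelled examples.

```python
from statistics import mean

def rule_based_sort(weight_kg: float) -> str:
    """Fixed 'if A, then B' logic: the 5.0 kg cutoff is hand-coded (hypothetical value)."""
    return "heavy_bin" if weight_kg > 5.0 else "light_bin"

def train_threshold(samples: list[tuple[float, str]]) -> float:
    """Learn a cutoff from labelled examples: the midpoint between the two class means."""
    heavy = [w for w, label in samples if label == "heavy_bin"]
    light = [w for w, label in samples if label == "light_bin"]
    return (mean(heavy) + mean(light)) / 2

def learned_sort(weight_kg: float, threshold: float) -> str:
    """Same decision as the rule-based version, but the cutoff comes from training data."""
    return "heavy_bin" if weight_kg > threshold else "light_bin"

# Hypothetical training data: (weight in kg, correct bin)
training_data = [
    (1.0, "light_bin"),
    (2.0, "light_bin"),
    (8.0, "heavy_bin"),
    (10.0, "heavy_bin"),
]
threshold = train_threshold(training_data)
```

If the parts on the line change, the rule-based version needs a programmer to edit the cutoff, while the learned version only needs new labelled examples. Real AI systems use far richer models, but the division of labor is the same.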

Robotics and AI can, however, coexist. Robotic projects that use AI are in the minority, but such systems are becoming more common and will enhance robotics as AI systems grow in sophistication.

AI-Driven Robots

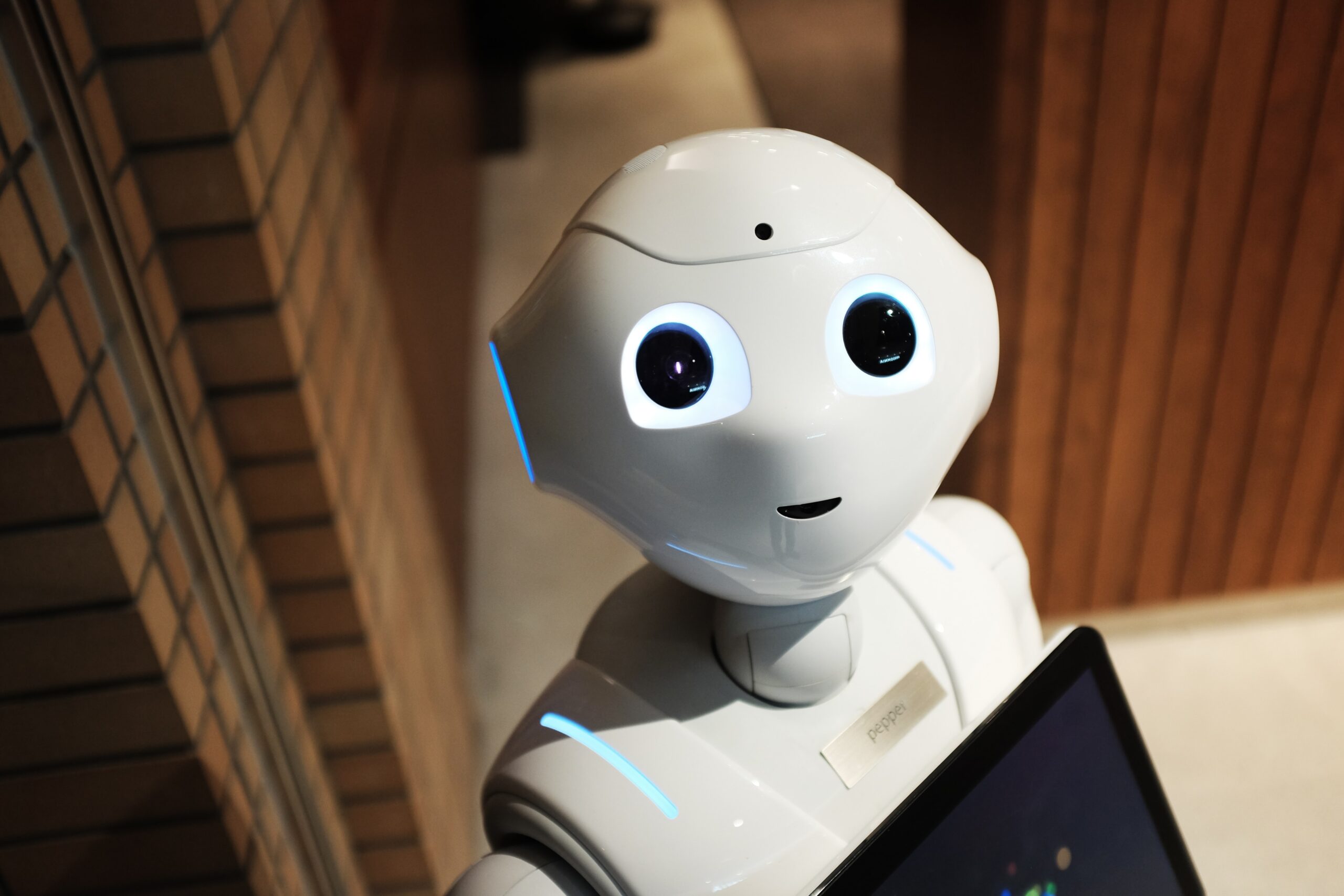

Amazon is testing Astro, the newest example of a household robot. It is essentially a self-driving Echo Show. The robot uses AI to navigate a space autonomously, acting as an observer (using microphones and a periscopic camera) when the owner is not present.

This type of robot is not novel; robotic vacuums have been in our homes, navigating around furniture, for almost a decade. But even these devices are becoming “smarter” with improved AI.

The company behind the robot vacuum Roomba, iRobot, announced a new model that uses AI to spot and avoid pet poop.

Robotics and AI in Manufacturing

Robotic AI manufacturing, also known as Industry 4.0, is growing in scope and will become transformational. This fourth industrial revolution ranges from something as simple as a robot navigating its way around a warehouse to systems like those of Vicarious, which designs turnkey robotic solutions for tasks too complex for programmed-only automation.

Vicarious is not alone in this service. For example, the Site Monitoring Robot from Scaled Robotics can patrol a construction site, scanning it and analyzing the data for potential quality issues. In addition, the Shadow Dexterous Hand is agile enough to pick soft fruit from trees without crushing it and can learn from human examples, potentially making it a game changer in the pharmaceutical industry.

Robotics and AI in Business

For any business needing to send things within a four-mile radius, Starship Technologies has delivery robots equipped with sensors, mapping systems, and AI. Its wheeled robot can determine the best routes to take on the fly while avoiding hazards in the environment it navigates.
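To give a feel for what choosing a route around obstacles involves, here is a minimal sketch using breadth-first search on a small grid map. It is an assumption-laden illustration, not Starship Technologies’ method: the grid encoding (0 for free, 1 for blocked) and the function name `shortest_route` are hypothetical, and a real delivery robot would combine live sensor data with much more sophisticated planning.

```python
from collections import deque

def shortest_route(grid, start, goal):
    """Breadth-first search for a shortest path on a grid.

    grid: list of rows, where 0 is a free cell and 1 is an obstacle (assumed encoding).
    start, goal: (row, col) tuples.
    Returns the list of cells from start to goal, or None if no route exists.
    """
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    came_from = {start: None}  # maps each visited cell to the cell it was reached from
    while queue:
        current = queue.popleft()
        if current == goal:
            # Walk back through came_from to rebuild the route.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        r, c = current
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (r + dr, c + dc)
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in came_from:
                came_from[nxt] = current
                queue.append(nxt)
    return None

# Hypothetical map: the middle row is mostly blocked, so the robot must go around.
sidewalk = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
route = shortest_route(sidewalk, (0, 0), (2, 0))
```

If a new obstacle appears, the robot can update the grid and call the function again, which is the simplest form of replanning on the fly.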

In the food service space, robots are becoming even more impressive. Flippy, the robotic chef from Miso Robotics, uses 3D and thermal vision, learns from the kitchen it’s in, and acquires new skills over time, well beyond the burger flipping that earned it its name.

Robotics and AI in Healthcare

Front-line medical professionals are tired and overworked. Unfortunately, in healthcare, fatigue can lead to fatal consequences.

Robots don’t tire, which makes them well suited to take over such work. Waldo Surgeon robots, for example, perform operations with steady “hands” and incredible accuracy.

Robots can be helpful in medicine far beyond a trained surgeon’s duties. Handing more basic, lower-skilled work to robots will free up medical professionals’ time to focus on care.

The Moxi robot from Diligent Robotics can do many tasks, from running patient samples to distributing PPE, giving doctors and nurses more of this valuable time. Cobionix has developed a robot that administers needleless vaccinations without human supervision.

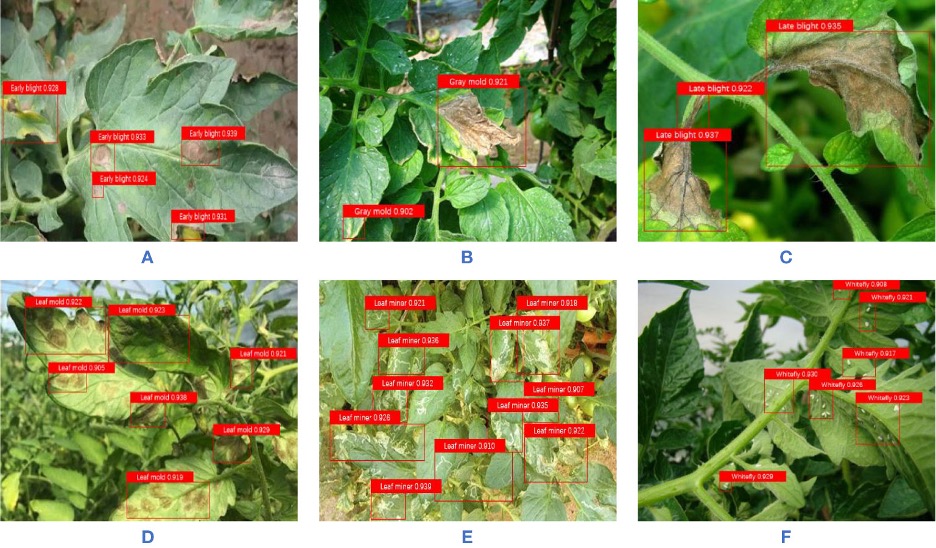

Robotics and AI in Agriculture

The use of robotics in agriculture will reduce the effect of persistent labor shortages and worker fatigue in the sector. But there is an additional advantage that robots can bring to agriculture: sustainability.

Iron Ox uses robotics with AI to ensure that every plant gets the optimal level of water, sunshine, and nutrients so that it grows to its fullest potential. When each plant is analyzed using AI, less water and fertilizer are required, producing less waste.

The AI will learn from its recorded data, improving that farm’s yields with every new harvest.

The Agrobot E Series has 24 robotic arms that it can use to harvest strawberries, and it uses its AI to determine the ripeness of the fruit while doing so.

Robotics and AI in Aerospace

NASA has been working to improve its Mars rovers’ AI while working on a robot to repair satellites.

Other companies are also working on autonomous rovers. Ispace’s rover uses onboard tools and may be the device hired to lay the ‘Moon Valley’ colony’s future foundation.

Additional companies and agencies are trying to enhance space exploration with AI-controlled robots. For example, CIMON from Airbus is like Siri in space. It’s designed to aid astronauts in their day-to-day duties, reducing stress with speech recognition and operating as a system for problem detection.

When to Avoid AI?

The fundamental argument against using AI in robots is that, for most tasks, AI is unnecessary. The tasks that are currently being done by robots are repetitive and predictable; adding AI to them would complicate the process, likely making it less efficient and more costly.

There is a caveat to this. To date, most robotic systems have been designed around the limits of AI at the time they were implemented. They were created to do a single programmed task because they could not do anything more complex.

However, with the advances in AI, the lines between AI and robotics are blurring. Outside of business- or healthcare-driven uses, we’ve noticed how AI facilitates the relatively new, lucrative field of algorithmic trading, which is becoming increasingly available to retail investors.

Closing Thoughts

AI and robotics are different but related fields. AI systems mimic the human mind, while robots help complete tasks more efficiently. Robots can include an AI element, but they can exist independently too.

Robots designed to perform simple and repetitive tasks would not benefit from AI. However, many AI-free robotic systems were designed around the limitations of AI at their time of implementation. As the technology improves, these legacy systems may benefit from an AI upgrade, and new systems will be more likely to build an AI component into their design. This change will result in the marrying of the two disciplines.

We have seen how AI and robotics can aid in several different sectors, keeping us safer, wealthier, and healthier while making some jobs easier and letting robots perform others more efficiently. However, we must also consider a possible change in employment structure. Some jobs will be outsourced to robots, and the people affected must be accounted for with training and other options for employment.

With the combination of AI and robotics, significant changes are on our horizon. This combination represents the very forefront of innovation.

Disclaimer: The information provided in this article is solely the author’s opinion and not investment advice – it is provided for educational purposes only. By using this, you agree that the information does not constitute any investment or financial instructions. Do conduct your own research and reach out to financial advisors before making any investment decisions.

The author of this text, Jean Chalopin, is a global business leader with a background encompassing banking, biotech, and entertainment. Mr. Chalopin is Chairman of Deltec International Group, www.deltecbank.com.

The co-author of this text, Robin Trehan, has a bachelor’s degree in economics, a master’s in international business and finance, and an MBA in electronic business. Mr. Trehan is a Senior VP at Deltec International Group, www.deltecbank.com.

The views, thoughts, and opinions expressed in this text are solely the views of the authors, and do not necessarily reflect those of Deltec International Group, its subsidiaries, and/or its employees.